Re: unknown (solid?) caps on MSI "military class" graphics card?

tape or glue a K-type thermocouple probe to a FET or coil - coils can get hot too.

but why?

unless you're planning on replacing the FETs with ones that have a lower on-resistance.
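
for a rough idea of why on-resistance matters: conduction loss in a FET is roughly I² × Rds(on), so a lower on-resistance means less heat at the same current. A minimal sketch, assuming made-up numbers (the 30 A phase current and the 5 mΩ / 3 mΩ parts are hypothetical, not measured from this card), and ignoring switching losses and the rise of Rds(on) with temperature:

```python
def conduction_loss_w(i_rms_a: float, rds_on_ohm: float) -> float:
    """Approximate FET conduction loss in watts: P = I_rms^2 * Rds(on)."""
    return i_rms_a ** 2 * rds_on_ohm


if __name__ == "__main__":
    phase_current_a = 30.0   # hypothetical RMS current through one FET
    stock_rds_on = 0.005     # hypothetical stock part, 5 milliohm
    better_rds_on = 0.003    # hypothetical replacement, 3 milliohm

    stock_loss = conduction_loss_w(phase_current_a, stock_rds_on)
    better_loss = conduction_loss_w(phase_current_a, better_rds_on)

    print(f"stock part:       {stock_loss:.2f} W")
    print(f"replacement part: {better_loss:.2f} W")
    print(f"heat saved:       {stock_loss - better_loss:.2f} W per FET")
```

a temperature reading from the probe only tells you how hot the part runs; a comparison like this one is what tells you whether swapping FETs would be worth the effort.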

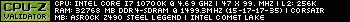

nVidia RTX 3080 TI, Corsair RM750I.

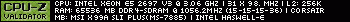
